Running Tests Automatically with CI Pipelines | E2E Unit Testing

Automated testing is an essential part of modern software development. By integrating tests into CI pipelines, teams can catch bugs early, reduce manual effort, and maintain confidence in their code. Whether it’s running unit tests for small chunks of logic, integration tests to verify modules work together, or end-to-end (E2E) tests to simulate real user scenarios, a well-thought-out CI testing strategy is key to shipping high-quality applications.

This guide covers how to run tests automatically in CI pipelines. We’ll discuss unit, integration, and E2E testing, generating test reports and coverage metrics, coping with flaky tests, and creating an effective CI feedback loop for developers.

Table of Contents

- What is Automated Testing in CI Pipelines?

- Unit, Integration, and End-to-End (E2E) Testing in CI

- Generating Test Reports and Measuring Coverage

- Handling Flaky Tests

- Building a Developer-Friendly CI Feedback Loop

- Best Practices

- Final Thoughts

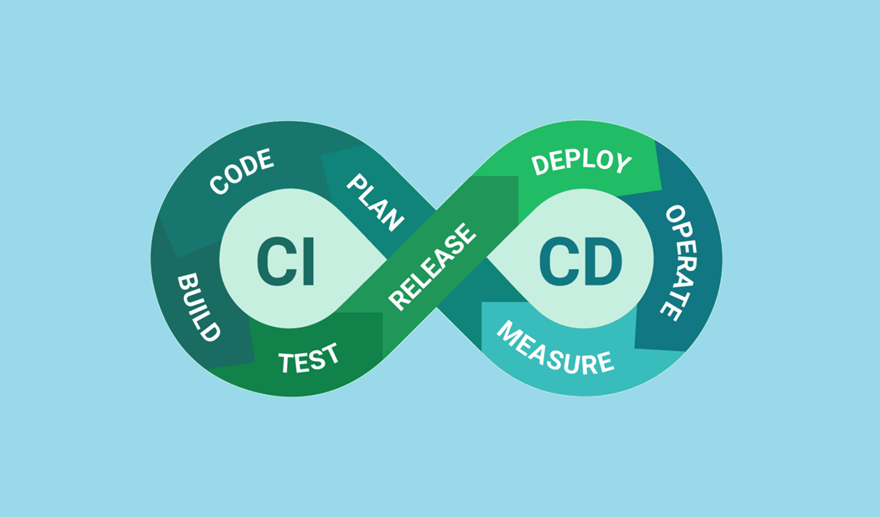

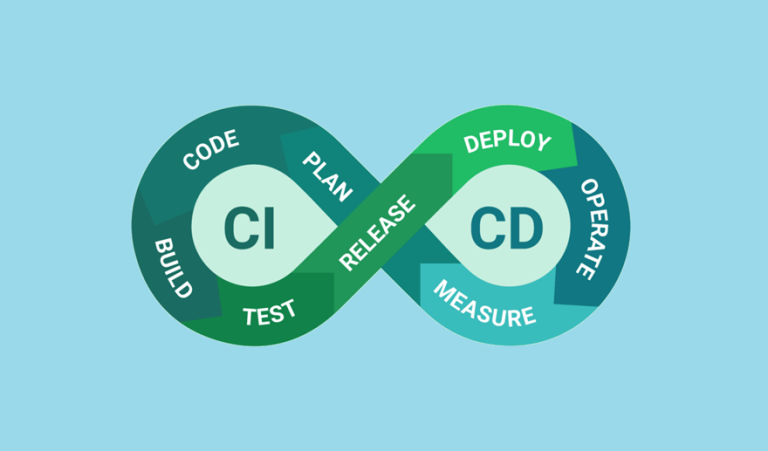

What is Automated Testing in CI Pipelines?

Automated testing in CI pipelines refers to the practice of integrating various types of tests (unit, integration, E2E) into the CI workflow so that they’re executed whenever new code is added, ensuring early detection of issues.

Benefits include:

- Immediate feedback: Developers can quickly identify and fix problems.

- Improved quality control: Automated tests prevent broken code from reaching production.

- Team velocity: Tests run without manual effort, so verification keeps pace as the project grows.

Unit, Integration, and End-to-End (E2E) Testing in CI

Unit Testing

What it is:

Unit testing involves testing individual components or functions in isolation, typically using mocks and stubs to simulate their dependencies.
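To make the idea of a stub concrete, here is a minimal sketch in plain Java with no test framework. The names ExchangeRateClient and PriceConverter and the fixed rate are hypothetical and exist only for illustration; they are not part of the Spring Boot example that follows.

```java
// Hypothetical dependency: in production this would call a remote service.
interface ExchangeRateClient {
    double rateFor(String currency);
}

// Hypothetical unit under test: its only collaborator is injected.
class PriceConverter {
    private final ExchangeRateClient rates;

    PriceConverter(ExchangeRateClient rates) {
        this.rates = rates;
    }

    // Converts an amount in the base currency into the target currency.
    double convert(double amount, String currency) {
        return amount * rates.rateFor(currency);
    }
}

class PriceConverterStubDemo {
    public static void main(String[] args) {
        // Stub: a fixed answer replaces the network call,
        // so the check is fast and deterministic.
        ExchangeRateClient stub = currency -> 2.0;
        PriceConverter converter = new PriceConverter(stub);

        double result = converter.convert(10.0, "EUR");
        if (result != 20.0) {
            throw new AssertionError("expected 20.0 but got " + result);
        }
        System.out.println("convert with stubbed rate 2.0 = " + result);
    }
}
```

Because the dependency is passed in through the constructor, the same class can receive the real client in production and the stub in tests.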

Why include it in CI Pipelines?

- Fast to execute.

- Catches logic bugs early.

Example for a Spring Boot Service:

Assume we have a CalculatorService in our Spring Boot application.

Service Code (CalculatorService.java):

public class CalculatorService {

    public int add(int a, int b) {
        return a + b;
    }
}

Unit Test (CalculatorServiceTest.java):

import static org.junit.jupiter.api.Assertions.assertEquals;

import org.junit.jupiter.api.Test;

public class CalculatorServiceTest {

    private final CalculatorService calculator = new CalculatorService();

    @Test
    void testAdd() {
        assertEquals(5, calculator.add(2, 3));
    }
}

Add mvn test to your CI configuration (GitLab CI syntax is shown here) to run the unit tests in their own job:

test_job:
  stage: test
  script:
    - mvn test

Integration Testing

What it is:

Integration tests validate that multiple components work together correctly. These typically involve databases, APIs, or services interacting in a test environment.

Why include it in CI Pipelines?

- Confirms that modules work correctly together.

- Catches problems that only appear when components interact, such as faulty wiring or mismatched contracts.

Example Using a Spring Boot Web Layer Test:

Service Code (HelloController.java):

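import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/api")
public class HelloController {

    @GetMapping("/hello")
    public String sayHello() {
        return "Hello, CI!";
    }
}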

Test (HelloControllerIntegrationTest.java):

import static org.hamcrest.Matchers.equalTo;
import static org.springframework.test.web.servlet.request.MockMvcRequestBuilders.get;
import static org.springframework.test.web.servlet.result.MockMvcResultMatchers.*;

import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.autoconfigure.web.servlet.WebMvcTest;
import org.springframework.test.web.servlet.MockMvc;

@WebMvcTest(HelloController.class)
public class HelloControllerIntegrationTest {

    @Autowired
    private MockMvc mockMvc;

    @Test
    void testSayHello() throws Exception {
        mockMvc.perform(get("/api/hello"))
                .andExpect(status().isOk())
                .andExpect(content().string(equalTo("Hello, CI!")));
    }
}

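Add integration tests to the pipeline under a dedicated job. The command below assumes that a Maven profile named integration is defined in pom.xml to select the integration tests: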

integration_test_job:
  stage: test
  script:
    - mvn test -Pintegration

End-to-End Testing

What it is:

End-to-End (E2E) tests simulate real-world user interactions across multiple systems or services in the application.

Why include it in CI Pipelines?

- Validates the entire app workflow.

- Catches user-facing bugs.

Popular Frameworks:

- Cypress (JavaScript, for web applications)

- Selenium (browser automation through WebDriver, with bindings for several languages)

Example Cypress Test:

Test a login feature:

describe('Login Feature', () => {
  it('logs in a user successfully', () => {
    // Relative path: resolved against the baseUrl set in the Cypress configuration.
    cy.visit('/login');
    cy.get('input[name="username"]').type('testuser');
    cy.get('input[name="password"]').type('password123');
    cy.get('button[type="submit"]').click();
    // Passes only if the welcome text appears on the page.
    cy.contains('Welcome, testuser');
  });
});

To run these browser tests in CI, add a job that invokes npx cypress run, which executes the tests headlessly by default.

Generating Test Reports and Measuring Coverage

Test Report Examples

Most CI/CD tools allow you to collect and display test results. Generate reports in formats like JUnit XML or HTML.

Configure the Maven Surefire plugin, which writes JUnit-style XML result files, to gather test results:

<build>
  <plugins>
    <plugin>
      <groupId>org.apache.maven.plugins</groupId>
      <artifactId>maven-surefire-plugin</artifactId>
      <version>3.0.0</version>
      <configuration>
        <reportsDirectory>${project.build.directory}/surefire-reports</reportsDirectory>
      </configuration>
    </plugin>
  </plugins>
</build>

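Point your CI tool at these files to display the results. In GitLab, list target/surefire-reports/TEST-*.xml under artifacts:reports:junit; in Jenkins, publish the same files with the junit pipeline step.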
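To show what such a report contains, the sketch below reads the counters from the root testsuite element of a JUnit-style XML file and prints a one-line summary. It is only an illustration of the format: the class name SurefireSummary and the sample XML string are made up, and a real CI tool does this parsing for you.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.xml.sax.InputSource;

class SurefireSummary {
    // Builds a one-line summary from the root <testsuite> element's counters.
    static String summarize(String junitXml) {
        try {
            Element suite = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(junitXml)))
                    .getDocumentElement();
            int tests = Integer.parseInt(suite.getAttribute("tests"));
            int failures = Integer.parseInt(suite.getAttribute("failures"));
            int errors = Integer.parseInt(suite.getAttribute("errors"));
            int skipped = Integer.parseInt(suite.getAttribute("skipped"));
            int passed = tests - failures - errors - skipped;
            return suite.getAttribute("name") + ": " + passed + " passed, "
                    + (failures + errors) + " failed, " + skipped + " skipped";
        } catch (Exception e) {
            // Covers malformed XML as well as missing or non-numeric counters.
            throw new IllegalArgumentException("not a readable JUnit XML report", e);
        }
    }

    public static void main(String[] args) {
        // Made-up sample report for illustration.
        String sample = "<testsuite name=\"CalculatorServiceTest\" tests=\"3\" "
                + "failures=\"1\" errors=\"0\" skipped=\"0\"></testsuite>";
        System.out.println(summarize(sample));
        // prints: CalculatorServiceTest: 2 passed, 1 failed, 0 skipped
    }
}
```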

Code Coverage

Collect coverage metrics using plugins like:

- JaCoCo (Java)

- Istanbul (JavaScript)

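Integrate JaCoCo with Maven. The declaration below only registers the plugin; to produce a report, also bind its prepare-agent and report goals in an executions section: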

<plugin>
  <groupId>org.jacoco</groupId>
  <artifactId>jacoco-maven-plugin</artifactId>
  <version>0.8.8</version>
</plugin>

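Track coverage in GitLab pipelines. JaCoCo writes its own XML format rather than Cobertura XML, so the declared coverage_format must match the file: recent GitLab releases accept jacoco directly, while older ones need jacoco.xml converted to Cobertura XML first:

artifacts:
  reports:
    coverage_report:
      coverage_format: jacoco
      path: target/site/jacoco/jacoco.xml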
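Coverage tools such as JaCoCo report counters of covered and missed items (lines, branches, and so on), and the percentage follows from those two numbers. As a rough sketch of the arithmetic behind a coverage gate, assuming a hypothetical class named CoverageGate and a made-up 80% threshold:

```java
class CoverageGate {
    // Line coverage as a percentage: covered / (covered + missed) * 100.
    static double percent(int covered, int missed) {
        int total = covered + missed;
        if (total == 0) {
            return 100.0; // nothing to cover; treated as fully covered in this sketch
        }
        return 100.0 * covered / total;
    }

    // True when coverage meets the team's minimum (a hypothetical policy value).
    static boolean passes(int covered, int missed, double minimumPercent) {
        return percent(covered, missed) >= minimumPercent;
    }

    public static void main(String[] args) {
        // Example counter: 180 covered lines, 20 missed lines.
        System.out.println(percent(180, 20));      // 90.0
        System.out.println(passes(180, 20, 80.0)); // true
    }
}
```

In practice the build tool or CI platform usually enforces the threshold; the point here is only how the figure is derived.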

Handling Flaky Tests

Flaky tests pass or fail inconsistently without any change to the code. Common causes include:

- Networking delays

- Outdated data dependencies

- Poorly written test scripts

Strategies to Handle Flaky Tests

- Retry Mechanisms: Enable retries for suspected flaky tests. In GitLab CI, for example, setting the job-level retry keyword to max: 2 reruns a failed job up to two more times.

- Isolate Environment Externalities: Use mocks or stubs instead of calling live services during tests.

- Quarantine Flaky Tests: Tag flaky tests so they don’t block pipeline progress.

- Monitor and Fix Recurring Errors: Visualize test trends with tools like Allure or Test Analytics.
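The retry strategy above can also be applied inside test code, for instance around a setup step that depends on a slow resource. The helper below is a minimal sketch under that assumption; the class name Retry and the simulated failure are hypothetical. It retries only on runtime exceptions, so assertion failures (which are errors in Java) are not retried and still fail immediately.

```java
import java.util.function.Supplier;

class Retry {
    // Runs the action once, then retries up to maxRetries more times if it throws.
    static <T> T withRetries(int maxRetries, Supplier<T> action) {
        if (maxRetries < 0) {
            throw new IllegalArgumentException("maxRetries must not be negative");
        }
        RuntimeException last = null;
        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                last = e; // remember the failure and try again
            }
        }
        throw last; // every attempt failed: surface the final error
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated flaky step: fails twice, then succeeds.
        String result = withRetries(2, () -> {
            if (++calls[0] < 3) {
                throw new IllegalStateException("transient failure");
            }
            return "ok";
        });
        System.out.println(result + " after " + calls[0] + " attempts");
        // prints: ok after 3 attempts
    }
}
```

Retries hide the symptom rather than the cause, so it helps to log every retried attempt and keep the limit small.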

Building a Developer-Friendly CI Feedback Loop

The faster developers receive feedback, the faster they can address issues. Here’s how to ensure an efficient feedback loop:

- Pipeline Alerts: Use notifications (e.g., email, Slack) to inform developers of test failures.

- Split Pipelines by Importance: Run fast tests (unit) on every commit and reserve slower tests (integration, E2E) for nightly builds.

- Clear Error Reporting: Attach logs or screenshots for failed tests as job artifacts.

- Actionable Feedback: Provide coverage gaps and test suggestions during merge requests.

Best Practices

- Optimize Test Execution: Parallelize tests to reduce pipeline duration.

- Keep Test Data Fresh: Regularly reset test databases or use disposable ones.

- Prioritize Critical Tests: Focus on running tests that cover high-traffic code paths.

- Integrate Performance Tests: Periodically include load tests in CI pipelines to ensure scalability.
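One common way to parallelize a suite is to split it into shards, with each parallel CI job running one shard. The sketch below shows a simple round-robin split; the class name TestSharding and the test names are hypothetical, and the 1-based index is assumed to mirror the numbering a CI system such as GitLab gives to parallel jobs.

```java
import java.util.ArrayList;
import java.util.List;

class TestSharding {
    // Picks the tests for one parallel job using round-robin assignment.
    // nodeIndex is 1-based; nodeTotal is the number of parallel jobs.
    static List<String> shard(List<String> tests, int nodeIndex, int nodeTotal) {
        if (nodeTotal < 1 || nodeIndex < 1 || nodeIndex > nodeTotal) {
            throw new IllegalArgumentException("nodeIndex must be within 1..nodeTotal");
        }
        List<String> selected = new ArrayList<>();
        for (int i = 0; i < tests.size(); i++) {
            if (i % nodeTotal == nodeIndex - 1) {
                selected.add(tests.get(i));
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        // Hypothetical test class names.
        List<String> tests = List.of("ATest", "BTest", "CTest", "DTest", "ETest");
        System.out.println(shard(tests, 1, 2)); // [ATest, CTest, ETest]
        System.out.println(shard(tests, 2, 2)); // [BTest, DTest]
    }
}
```

Round-robin keeps the shards the same size but ignores how long each test takes; splitting by recorded durations balances the jobs better when test times vary widely.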

Final Thoughts

Running tests automatically in CI pipelines not only saves time but also creates a culture of quality and accountability. From unit tests catching bugs in isolated components, to integration and E2E tests validating the entire system, automated pipelines keep developers confident in their codebase.

Invest in tools to manage flaky tests, generate meaningful reports, and design fast feedback loops for the team. By building a solid CI testing strategy, you’ll enable rapid, reliable development and deployment cycles for your project!